|

|

NVIDIA HGX™ H200 8-GPU 7U Server

w/ NVIDIA HGX™ H200 8-GPU (SXM form factor) and 2x 4th/5th Gen Intel® Xeon® Scalable processors

→ Explore

|

|

|

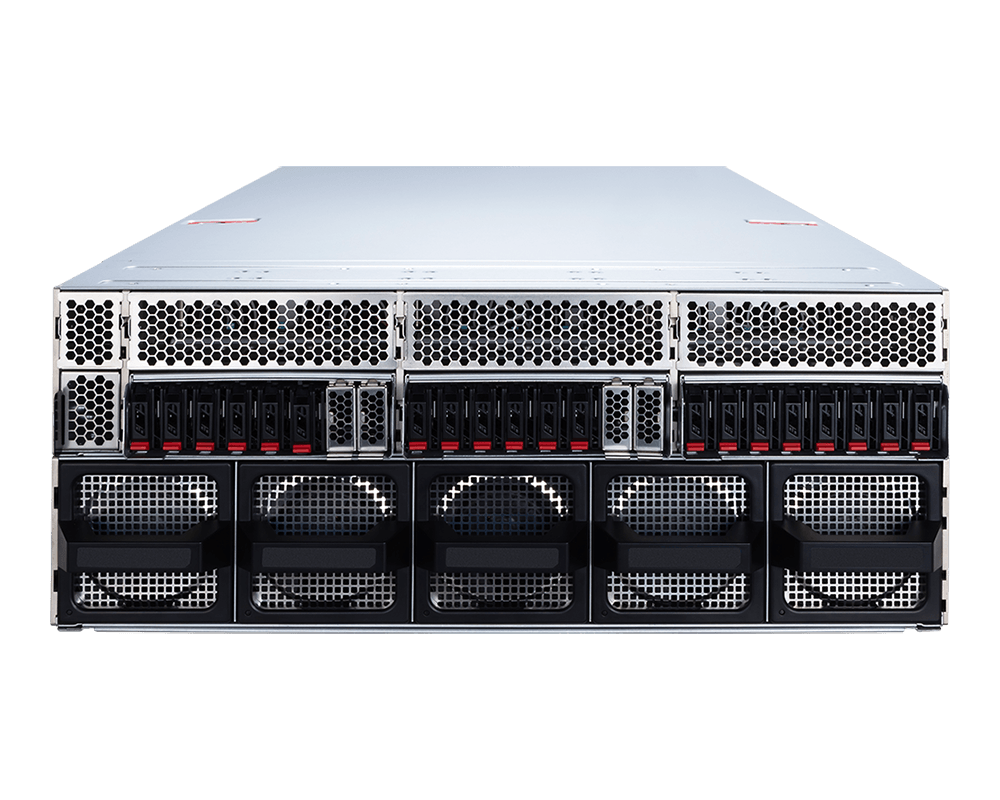

NVIDIA H200 NVL PCIe 8/10-GPU 5U Server

w/ 8x NVIDIA H200 NVL PCIe GPUs and 2x AMD EPYC™ 9005 processors

Explore >

|

|

|

|

|

NVIDIA HGX™ H200 8-GPU SXM5 5U Server

w/ NVIDIA HGX™ H200 8-GPU and 2x AMD EPYC™ 9004/9005 processors

Explore >

w/ NVIDIA HGX™ H200 8-GPU and 2x 4th/5th Gen Intel® Xeon® processors

Explore >

|

|

|

|

|

NVIDIA MGX™ Architecture 4U Server

w/ 8x NVIDIA RTX PRO™ 6000 Blackwell GPUs and 2x Intel® Xeon® 6 processors

Explore >

|

|

|

|

Intel® Xeon® W-3500/W-2500 Workstations

To drive professional workflows, you'll need workstations that can keep pace

Explore >

|

|

|

|

|

High-End GPU Powerhouse

w/ 2x Intel® Xeon® Scalable processors and 4x NVIDIA GPUs

Explore >

|

|

|

|

|

AMD Ryzen™ Threadripper™ PRO Workstations

Imagine What You Can Do - Maximized Cores. Advanced Graphics. Limitless Potential.

Explore >

|

|

|

|

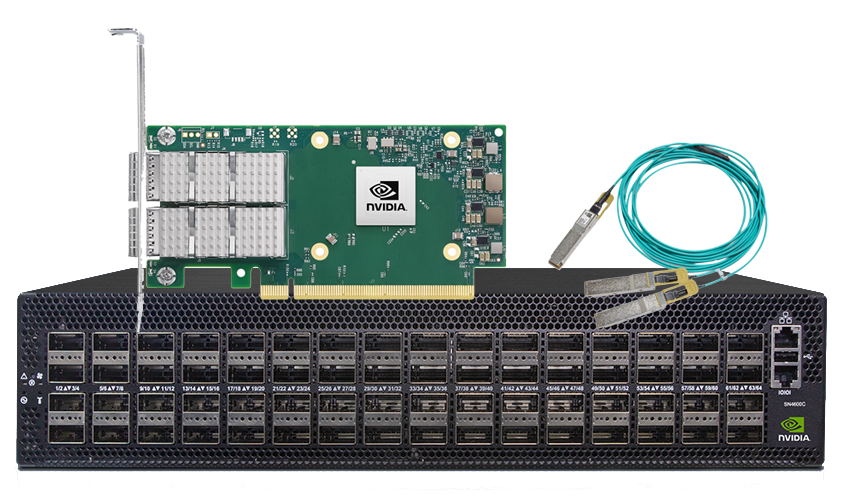

Already have the servers? Start building out your infrastructure with Colfax Direct

Adapters | Switches | Cables | Transceivers

Chelsio | Edgecore | Mellanox/NVIDIA | Netronome | Xilinx

→ Take Me There

|

Get The Outcome You Need

We help accelerate business and research outcomes with expertly engineered solutions.

How? Nearly 38 years of experience, deep domain expertise and flawless execution means robust systems that get you productive right out-of-the box,

letting you focus on innovation and discovery.

→ Who we are